Business Intelligence (BI), i.e. insight into and understanding of individual business processes and their interrelations, has for years been a key topic for large companies and their CIOs. It has evolved from a "nice-to-have" into a "mission-critical" topic. There are two reasons. One is the need to realize optimization potential and competitive advantages through BI. The other is the growing ability to collect and analyze enormous volumes of data.

Data Warehousing (DW) is a discipline that usually accompanies BI (though it need not) and provides the (data) foundations for BI. This is why the two terms are often, and wrongly, used synonymously. The economic importance of both disciplines, and the marketing that comes with it, also feed this simplification.

This talk aims to introduce outsiders to BI and DW using concrete examples and real-world scenarios and products. A basic understanding of relational databases, including their strengths and weaknesses, is helpful but not required to follow the talk.

Tuesday, January 24, 2012

Real World Business Intelligence and Data Warehousing

Tuesday, June 14, 2011

CAP Equivalent for Analytics?

- sophistication: in simple terms, this refers to the complexity of SQL statements needed for the analysis, e.g. complex joins, multiple sorts etc.

- volume: this refers to the data volume involved in the analysis.

- latency: here, he means the combination of time to load and transform data (ideally: 0) + query processing time (ideally: sub-second).

- costs: strictly speaking, this means the costs of hardware and software, but I'd add that those costs are a symptom of the complexity of the hardware and/or software architecture.

Tuesday, April 12, 2011

NoSQL Options in Analytics and Data Warehousing

(Here, data warehousing serves as a guiding example for a generic business application)

Recent years have seen many initiatives to relax ACID properties in order to translate the gained freedom into other benefits like better performance, scalability or availability. Typically, the results of such approaches are trade-offs like those captured by the CAP theorem. The latter provides a systematic and theoretically proven way to look at the options for a certain trade-off, mostly sacrificing consistency in order to ensure availability and partition tolerance.
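To make the trade-off tangible, here is a toy model in Python. All class and method names are hypothetical and chosen for illustration only. Two replicas of a key-value store replicate synchronously while the network is healthy. Once a partition occurs, an "AP" store keeps accepting writes and lets the replicas diverge. A "CP" store refuses writes to keep the replicas identical. The sketch deliberately ignores real-world concerns such as reconciliation after the partition heals.

```python
# Toy model of the CAP trade-off; all names are hypothetical.
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Replica:
    name: str
    data: dict = field(default_factory=dict)


class ReplicatedStore:
    """Toy two-replica store; mode is "AP" (stay available) or "CP" (stay consistent)."""

    def __init__(self, mode: str) -> None:
        if mode not in ("AP", "CP"):
            raise ValueError("mode must be 'AP' or 'CP'")
        self.mode = mode
        self.a = Replica("a")
        self.b = Replica("b")
        self.partitioned = False

    def write(self, replica: Replica, key: str, value: int) -> bool:
        """Return True if the write was accepted."""
        if self.partitioned and self.mode == "CP":
            return False  # sacrifice availability: refuse rather than diverge
        replica.data[key] = value
        if not self.partitioned:
            # healthy network: replicate synchronously to the other replica
            other = self.b if replica is self.a else self.a
            other.data[key] = value
        return True

    def read(self, replica: Replica, key: str) -> Optional[int]:
        return replica.data.get(key)


if __name__ == "__main__":
    for mode in ("AP", "CP"):
        store = ReplicatedStore(mode)
        store.write(store.a, "stock", 10)
        store.partitioned = True
        accepted = store.write(store.a, "stock", 7)
        print(mode, "accepted:", accepted,
              "a:", store.read(store.a, "stock"),
              "b:", store.read(store.b, "stock"))
```

During the partition, the AP store accepts the second write, but replica b still returns the old value 10. The CP store rejects the write, so both replicas keep returning 10.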

These are purely technical properties. They are generic and apply to all applications for which a loss of availability or (network) partitions constitute a huge (economic) risk. However, an application (running on top of a high-volume or large-scale DBMS) frequently offers opportunities of its own to reduce its reliance on ACID. One particular example is the software that manages tables, data flows, processes, transformations etc. in a data warehouse. A major goal of such software is to expose data from multiple source systems in a way that makes sense to the end user. Each of these source systems is presumably consistent in itself. Here, "making sense" means (a) that the data is harmonized (e.g. by transformations, cleansing etc.) but also (b) that it is plausible*.

What does the latter mean? Let's consider the following example. Typically, uploads from the various source systems are scheduled independently of each other. Frequently, however, scenarios require the data from all relevant systems to be completely uploaded to provide a consistent (plausible) view. For instance, the total costs of a business process (e.g. a sales process) are only complete (i.e. "consistent" or "plausible") once the costs of all its sub-processes (e.g. order + delivery + billing) have been uploaded to the data warehouse. Until then, costs may be only partially uploaded or may overlap. To the end user, this appears implausible: depending on the moment at which he looks at the data, the total costs show varying amounts. Normally, such a result is technically correct (i.e. consistent) but not plausible to the end user.
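A few lines of Python illustrate the effect. The cost figures and the sales-process IDs S1 and S2 are invented for illustration. Each query sums whatever has been uploaded so far. Every intermediate total is therefore technically correct, yet the user sees different totals depending on when he looks.

```python
# Hypothetical cost records per sales process, one feed per source system.
ORDER_COSTS = {"S1": 100, "S2": 80}
DELIVERY_COSTS = {"S1": 40, "S2": 25}
BILLING_COSTS = {"S1": 10, "S2": 5}


def total_costs(loaded_feeds: list) -> int:
    """Total costs over the feeds that have been uploaded so far."""
    return sum(sum(feed.values()) for feed in loaded_feeds)


# The loads are scheduled independently; here they happen to arrive in this order.
arrivals = [ORDER_COSTS, DELIVERY_COSTS, BILLING_COSTS]
for n in range(1, len(arrivals) + 1):
    print(f"after {n} of {len(arrivals)} loads: total costs = {total_costs(arrivals[:n])}")
```

The loop prints totals of 180, 245 and 260. Only the last one is plausible.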

Frequently, such effects are tackled by implementing plausibility gates. These allow data to proceed to the next layer of a data warehouse only once all related data has arrived. In other words, plausibility in this context is a certain form of consistency, namely one that, for instance, harmonizes consistency across multiple (source) systems. A plausibility gate is then a kind of managed COMMIT that brings the data in the next layer into a plausible state. It is a perfect example of a property or fact that exists within a business application and can be exploited to manage consistency differently and with better performance. For example, individual bulk loads can be treated as part of a wider "(load or data warehouse) transaction" (which comprises all related loads) rather than in isolation. This clearly offers an opportunity to relax some constraints. The source of this relief lies in the business process and the sub-processes that underlie the various loads.
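A plausibility gate can be sketched as a buffer that releases a set of related loads only once all of them have arrived. The class name, the source names and the record layout below are hypothetical. A real data warehouse would persist the buffer and handle failures and timeouts, which this sketch omits. Here, a re-delivered load simply overwrites the buffered one.

```python
from dataclasses import dataclass, field


@dataclass
class PlausibilityGate:
    """Hypothetical gate: buffers related bulk loads and commits them together."""

    expected_sources: frozenset
    pending: dict = field(default_factory=dict)
    next_layer: list = field(default_factory=list)

    def arrive(self, source: str, records: list) -> bool:
        """Buffer one load; return True if it completed the set and triggered a commit."""
        if source not in self.expected_sources:
            raise ValueError(f"unexpected source: {source}")
        self.pending[source] = records  # a re-delivery overwrites the buffered load
        if set(self.pending) != self.expected_sources:
            return False  # still incomplete: nothing becomes visible downstream
        # "Managed COMMIT": all related loads move to the next layer at once.
        for src in sorted(self.pending):
            self.next_layer.extend(self.pending[src])
        self.pending.clear()
        return True


if __name__ == "__main__":
    gate = PlausibilityGate(frozenset({"order", "delivery", "billing"}))
    print(gate.arrive("order", [("S1", "order", 100)]))
    print(gate.arrive("billing", [("S1", "billing", 10)]))
    print(len(gate.next_layer))
    print(gate.arrive("delivery", [("S1", "delivery", 40)]))
    print(sum(amount for _, _, amount in gate.next_layer))
```

The first two arrivals return False and leave the next layer empty. The delivery load completes the set and returns True. Only then does the next layer show the complete total of 150.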

In the context of SAP's In-Memory Appliance (SAP HANA), SAP is currently investigating such opportunities within its rich suite of business applications. Exploiting such opportunities is expected to be one of the major sources of scalability and performance gains. These gains would go beyond the more generic, technology-based opportunities like main memory, multi-core (parallelism) and columnar data structures. We suggest looking into options within the classic world of business applications. The aim is to mimic what has been successfully implemented in non-classic applications, especially in the Internet-scale area where NoSQL platforms like Hadoop have been successfully adopted.

PS (27 May 2011): By coincidence, I've come across this article, which describes the consistency problem within a sales process. One example is that customers expect to be treated equally regardless of which sales channel they have used. For instance, they expect to return a product to a high street shop even though it was bought online. The article discusses the consistency problem in the context of sales management software. However, exactly the same happens in a DW context, so the discussion translates easily.

*The term "plausible" is used both to distinguish it from and to relate it to "consistent".

Friday, April 8, 2011

Is Data like Timber?

For quite some time, I have been playing with the idea of comparing data with a raw material (like timber) and information with a product made from that raw material (like furniture). This helps to suggest (a) that data and information are related but not the same thing and (b) that there is processing in between, similar to turning wood into furniture. With this blog post, I'd like to put this comparison out to the public. I also hope to trigger a good discussion that either identifies weaknesses of the comparison or develops the idea further.

So let's picture the process of how a tree becomes a cupboard, a bookshelf or a table:

- Trees grow in the forest.

- A tree is cut and the log is transported to a factory for further processing.

- At the factory, it is stored in some place.

- It is subsequently processed into boards. Various tools like saws, presses etc. are used in this context.

- The boards are frequently taken to yet another factory that applies various processing steps to create the furniture. Depending on the type of furniture (table, chair, cupboard, ...), a large or small number of more or less complex steps is necessary. Additional material like glass, screws, nails, handles, metal joints, paint, ... is added. Typical processing steps are cutting, pressing, painting, drilling, ...

Now, when you consider what happens to data before it becomes useful information displayed in a pivot table or a chart, you can identify similar steps:

- Data gets created by some business process, e.g. a customer orders some product.

- For analysis, the data is brought to some central place for further processing in a calculation engine. This place can be part of a data warehouse or of an on-the-fly infrastructure, e.g. via a federated approach that retrieves the data only when it is needed.

- There, it is stored, e.g. in persistent DB tables or a cache, where it can potentially "meet" data from other sources.

- Data is reformatted, harmonized, cleansed, ... using data quality or data transformation tools or plain SQL. Simply consider the various formats for a date like 4/5/2011, 5 Apr 2011, 5.4.11, 20110405, ...

- Data is enriched and combined with data from other sources, e.g. the click stream of your web server combined with the user master data table. Only this combination tells you, for instance, how many young, middle-aged or old people look at your web site. In the end, data has become useful information.
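The last two steps can be sketched in Python. The source-system names, the date format each one is assumed to use, the age bands and the record layouts are all hypothetical. A string like 4/5/2011 is ambiguous (4 May or 5 April?). The sketch therefore assumes each source's format is known rather than guessing it from the string. The enrichment step counts distinct visitors, not clicks, per age group.

```python
from datetime import date, datetime

# Assumption: each (hypothetical) source system delivers dates in one known format.
SOURCE_DATE_FORMATS = {
    "us_shop": "%m/%d/%Y",  # 4/5/2011
    "uk_crm": "%d %b %Y",   # 5 Apr 2011 (English month names, default C locale)
    "de_erp": "%d.%m.%y",   # 5.4.11
    "legacy": "%Y%m%d",     # 20110405
}


def harmonize_date(source: str, raw: str) -> date:
    """Parse a source-specific date string into one uniform date type."""
    return datetime.strptime(raw, SOURCE_DATE_FORMATS[source]).date()


def age_group(age: int) -> str:
    """Hypothetical age bands, for illustration only."""
    if age < 30:
        return "young"
    if age < 60:
        return "middle aged"
    return "old"


def visitors_by_age_group(clicks: list, users: dict) -> dict:
    """Join click-stream rows with user master data; count distinct visitors per group."""
    visitors = {}
    for user_id, _url in clicks:
        user = users.get(user_id)
        if user is None:
            continue  # no master data: this click cannot be classified
        visitors.setdefault(age_group(user["age"]), set()).add(user_id)
    return {group: len(ids) for group, ids in visitors.items()}


if __name__ == "__main__":
    raw_dates = [("us_shop", "4/5/2011"), ("uk_crm", "5 Apr 2011"),
                 ("de_erp", "5.4.11"), ("legacy", "20110405")]
    print({harmonize_date(src, raw).isoformat() for src, raw in raw_dates})

    clicks = [("u1", "/home"), ("u2", "/shop"), ("u1", "/shop"),
              ("u3", "/home"), ("u9", "/home")]
    users = {"u1": {"age": 25}, "u2": {"age": 45}, "u3": {"age": 71}}
    print(visitors_by_age_group(clicks, users))
```

All four raw strings map to the same date, 2011-04-05. In the click example, u1 is counted once despite two clicks. u9 is dropped because it has no master data.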

Monday, November 8, 2010

ACID Properties, Related Implications, In Particular Shared-Nothing Scalability

There is an interesting post on Daniel Abadi's blog called "The problems with ACID, and how to fix them without going NoSQL". It summarizes the current discussions and approaches around weakening the ACID properties of transactional DBMSs. The goal is to work around limitations (e.g. as outlined by the CAP theorem) and obstacles to scalability on shared-nothing architectures. Replication, as the most prominent method of achieving high availability, receives special attention. The post appears to outline a research paper presented at VLDB 2010.

The fundamental idea is to look at the isolation property in ACID. The authors argue that isolation still leaves the freedom to (conceptually) execute transactions in an arbitrary sequential order. This arbitrariness translates into arbitrary, i.e. potentially different, results depending on the chosen order, all of which comply with the isolation property. Now, suppose this arbitrariness were removed, i.e. a specific order were predefined. Then much of the complexity of the (commit) protocols required for durability seems to go away. An example is the two-phase commit protocol.
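The core observation can be reproduced with a toy example in Python. Two hypothetical transactions on an account balance (a deposit and an interest booking) yield different results depending on which serial order is chosen. Both results satisfy isolation. If each replica executes in its own arrival order, replicas can diverge and would need an agreement protocol. If all replicas follow one predefined order (here, a sequence number assumed to be assigned up front), they reach the same state without further coordination. This only illustrates the idea; it is not the protocol from the paper, and it ignores concurrency within a replica as well as failures.

```python
from typing import Callable, List, Tuple

Transaction = Callable[[int], int]


def deposit_100(balance: int) -> int:
    return balance + 100


def add_interest_10pct(balance: int) -> int:
    return balance * 110 // 100  # integer arithmetic keeps the example exact


def run_serially(initial: int, transactions: List[Transaction]) -> int:
    """Execute transactions one after another, i.e. a serial schedule."""
    state = initial
    for txn in transactions:
        state = txn(state)
    return state


def replica_state(initial: int, arrived: List[Tuple[int, Transaction]],
                  deterministic: bool) -> int:
    """Final state of one replica, given (sequence_number, txn) pairs in arrival order."""
    if deterministic:
        arrived = sorted(arrived, key=lambda pair: pair[0])  # predefined global order
    return run_serially(initial, [txn for _seq, txn in arrived])


if __name__ == "__main__":
    txns = [(1, deposit_100), (2, add_interest_10pct)]
    at_a, at_b = txns, list(reversed(txns))  # different arrival orders at two replicas
    for det in (False, True):
        print("deterministic" if det else "arrival order",
              replica_state(1000, at_a, det), replica_state(1000, at_b, det))
```

In arrival order, the two replicas end up at 1210 and 1200, respectively. With the predefined order, both end up at 1210.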

I can follow the argument in the blog post. I have not yet delved into all the details, but I believe it's an interesting and promising thought. At the very least, you get a good summary of the current state of the discussion.

Saturday, October 16, 2010

The SAP BW-HANA Relationship

With the announcement of HANA*, some customers, analysts and others have raised the question of how HANA relates to BW. A few of them have even offered their own home-made answer, namely the speculation that HANA would succeed BW. In this blog post, I'd like to offer some food for thought on this.

Currently, HANA's predominant value propositions are

i. extremely good performance for any type of workload

ii. a real-time replication mechanism between an operational system (like SAP ERP) and HANA

Let's match those for a moment with the original motivation for building up a decision-support system (DSS) or data warehouse (DW). In the 1990s, a typical list of arguments in favour of such a system looked like this:

1. Take load off operational systems.

2. Provide data models that are more suitable and efficient for analysis and reporting.

3. Integrate and harmonize various data sources.

4. Historize: store a longer history of data (e.g. for compliance reasons), thereby relieving OLTP systems of that task and the related data volumes.

5. Apply data quality mechanisms.

6. Secure OLTP systems against unauthorized access.

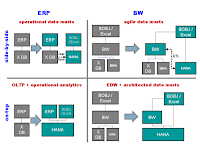

Installing a DW is typically motivated by some or all of those reasons. There is a particular sweet spot in that area, namely a DW (e.g. an SAP BW) set up for reasons 1. and 2. The other arguments are not relevant here because the DW is connected to basically one** operational system (like SAP ERP). In this case, no data has to be integrated and harmonized. The "T portion" in ETL or ELT is therefore void, and we are down to extraction-load (EL), which is ideally done in real time. So value proposition ii. comes in very handy. Critics will argue that such systems are not real data warehouses ... and I agree. But this is merely academic, as such systems do exist and are a fact. In summary, there is a good case for a certain subset of "data warehouses" (or reporting systems) that can now be built on HANA, with i. and ii. as their outstanding properties. See the top left scenario in figure 1.

Now, will this replace some BWs? Yes, certainly. In the light of HANA, a BW with a 1:1 connection to an ERP might no longer be the best choice. However, will this make BW obsolete in general? No, of course not. As indicated above, there is a huge case out there for data warehouses that integrate data from many heterogeneous sources. Take a landscape where all those sources are SAP, e.g. multiple unharmonized ERPs originating from regional structures, mergers and acquisitions. Even then, conceptual layers are still required to integrate, harmonize and consolidate huge volumes of data in a controlled fashion. See Jürgen Haupt's blog on LSA for a more comprehensive discussion of such an approach. I sometimes compare the data delivered to a data warehouse with timber delivered to a furniture factory. It is raw, basic material that needs to be refined in various stages depending on the type of furniture you want to produce. Shelves might require fewer steps (i.e. "layers") than a cupboard.

Finally, I believe that there is an excellent case for building a BW on top of HANA, i.e. for combining both. See the bottom right scenario in figure 1. HANA can be seen as an evolution of BWA, and as such, this combination has already proven extremely successful. BW 7.0 and BWA 7.0 have been on the market for about 5 years. BW 7.30 and BWA 7.20 have pushed the topic even further, albeit mainly focusing on the analytic layer of BW (in contrast to the DW layer). Continue this line of thought and assume that HANA is not only a BWA but can also meet primary storage requirements (ACID etc.). Then a huge potential opens up to support, for example,

- integrated planning (BW-IP): atomic planning operators (used in planning functions) can be implemented natively inside HANA. This benefits from the scalability and performance seen with BWA and OLAP, and it avoids transporting huge volumes of data from a DB server to an application server,

- data store objects (DSOs): one can think of implementing such an object natively (maybe as a special type of table) in HANA, thereby accelerating performance-critical operations such as data activation.

This is just a flavour of what is possible. So, overall, I see four potentially interesting HANA-based scenarios, and they are summarized in figure 1. I believe that HANA is great technology that will only truly shine if applications exploit it properly. SAP, as the business application company, has a huge opportunity to create those applications. BW (as a DW app) is one example that started down this path quite some time ago. So the question of the BW-HANA relationship has an obvious answer.

* High Performance ANalytic Appliance

** The case remains valid even if there are a few supporting data feeds, e.g. from small complementary sources.